What We’re Reading:

Well, maybe not reading, but listening to Humane Tech’s podcast “Your Undivided Attention,” specifically the August 12, 2024 episode, “This Moment in AI.” If reading is more your thing, here is the transcript.

EU AI Act:

The AI Act was published in the EU Official Journal on July 12, 2024. It entered into force on August 1, 2024, but most of its rules will begin to apply about two years later, on August 2, 2026. The Act is organized around uses of AI systems that are likely to pose high risks to fundamental rights.

To be in scope of the Act, the AI system must have a sufficient level of autonomy to make decisions without direct human control. An “AI system” is defined as a machine-based system that is designed to operate with varying levels of autonomy, that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers from the input it receives how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

In addition, certain AI systems and uses fall outside the scope of the Act, namely:

- AI systems developed and used for the sole purpose of scientific research and development;

- commercial research, development, and prototyping that occur before a product is introduced to the market;

- use of AI systems by individuals for purely personal, non-professional activity;

- AI systems used solely for military, defense, or national security objectives; and

- certain open source models.

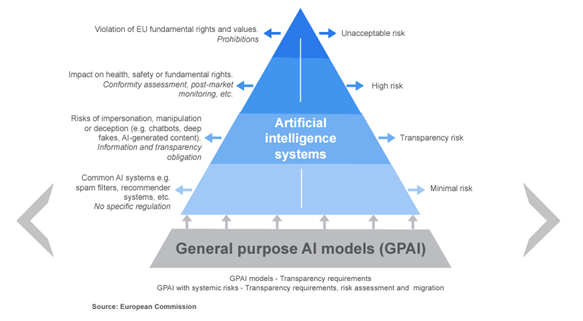

The EU AI Act focuses on risk and categorizes AI systems based on their capacity to cause harm to society. The obligations of AI system providers and deployers flow from an initial determination of the risk level of the AI system. The visual above, from the European Commission, illustrates these risk levels as a pyramid.

For example, AI systems that violate fundamental EU rights and values are strictly prohibited and must be removed from the market. Moving down the pyramid, high-risk AI systems are not prohibited but are subject to strict requirements, such as the development of a complete risk management program for the life of the AI system. At the bottom of the pyramid, AI systems that present minimal risk are not subject to regulation. Most AI systems will fall into this category.